MY ROLES

UX Design, UX Research, Visual Design, Video Editing

DESIGN PROCESS

LEARNING OUTCOMES

+ Conducting effective user interviews with deaf and hard-of-hearing (DHH) users

+ Testing hypotheses drawn from data analysis and research against feedback from end users

+ Translating user feedback into effective LoFi and HiFi prototypes

+ Diversifying personas so that the design solution applies to multiple scenarios

+ Conducting effective usability testing sessions based on design objectives


BACKGROUND RESEARCH

DHH Users in 2018

Primary Challenges

+ A language barrier exists between the hearing community and the deaf and hard-of-hearing (DHH) community.

+ American Sign Language (ASL) has its own grammar, vocabulary, and expression-based context.

+ Although the DHH community has a number of creative solutions at its disposal, many of them are ineffective in small group conversations or depend on a DHH participant’s proficiency with written English.

+ The inability to communicate effectively can significantly harm academic performance and create feelings of loneliness, isolation and frustration.

+ DHH users can feel self-conscious and ostracized when using an assistive device in a public setting.

Conversations based on Situations

Our background research highlighted specific situations in the daily lives of DHH users where they would need to communicate with hearing users who did not know ASL.

Each situation presents its own set of challenges depending on the complexity of the conversation.

Technical Interpreters

Our research also uncovered the importance of technical ASL interpreters. Technical interpreters need to have the following skill sets:

Specific Subjects for Interpreters

These are some of the areas that often require interpreters with knowledge of the subject matter being discussed.

COMPETITION ANALYSIS

Mobile VRS (Video Relay Service) Apps

We studied several mobile VRS apps designed for communication between hearing and DHH users. Our objective was to identify the features common to all of these apps, and to find solutions they had not yet incorporated as opportunities for BrickTalk.

USER RESEARCH

User Interviews

We began our process with a one-on-one interview with a DHH user. We wanted to build empathy with the user by understanding the challenges he faces on a daily basis.

We held a series of interviews with the user, including sessions to evaluate our ideas, sketches and prototypes.

The interview and usability evaluation sessions were conducted in the Future Everyday Technologies (FET) lab at Rochester Institute of Technology.

Interview Insights

DESIGN OPPORTUNITIES

Combining the data we gathered from the background research, competition analysis and user interview, we compiled a list of design opportunities.

JOB STORY

DESIGN OBJECTIVES

+ Incorporate a solution that grants access to an interpreter anywhere and anytime

+ Design a solution that allows users to have contextual conversations based on specific subjects (like 'Law' or 'Engineering')

+ Time and location should not be hindrances that prevent users from having a conversation

+ Extend the solution to a group setting that includes both DHH and hearing users

+ The solution needs to be primarily video-based

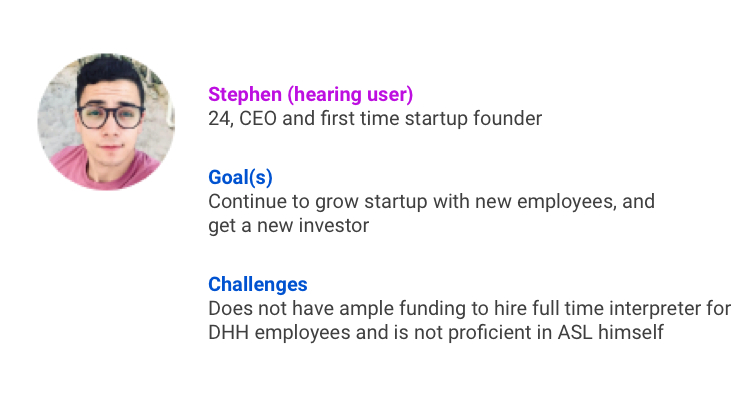

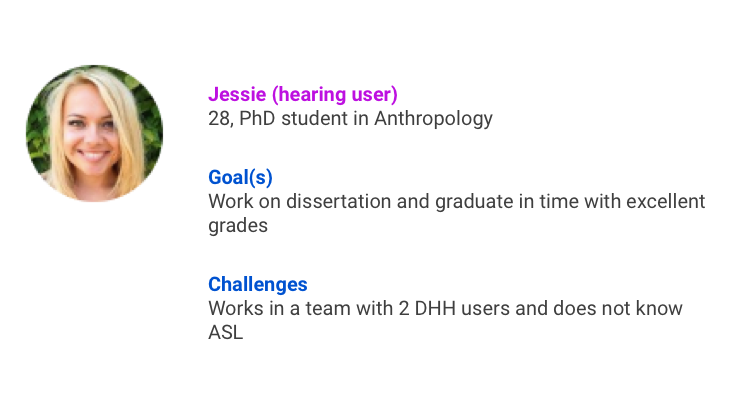

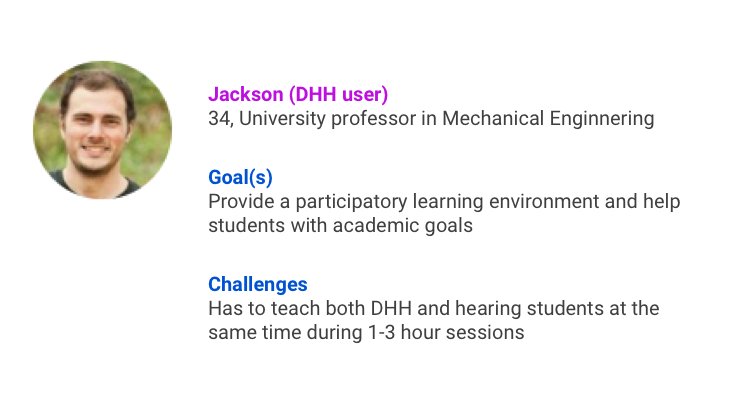

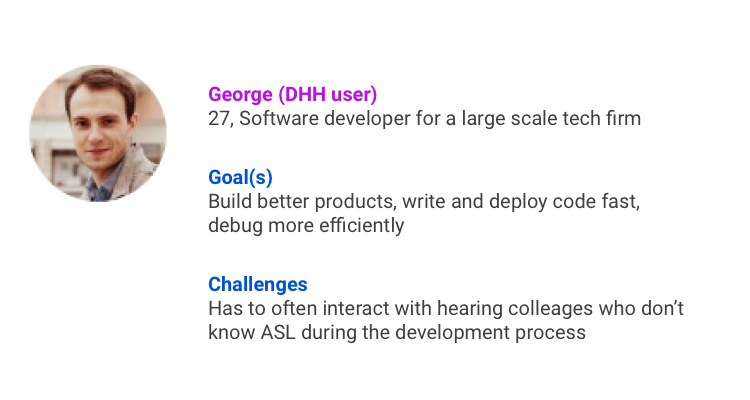

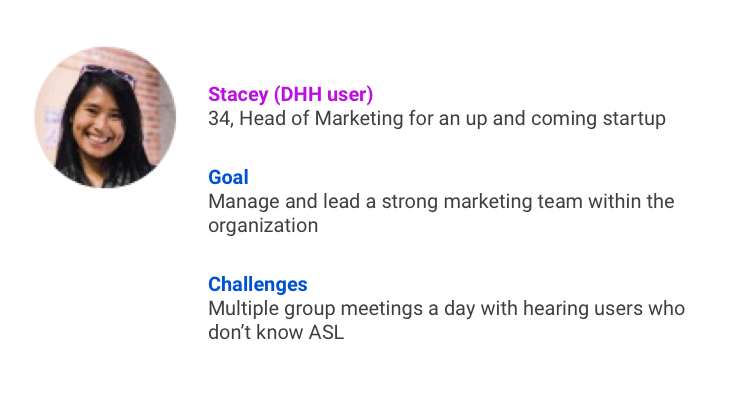

PROTO PERSONAS

Based on the background research, competition analysis and insights from the user interview, I created a set of proto-personas to gain a basic understanding of the goals and challenges faced by both DHH and hearing users in workplace and academic contexts.

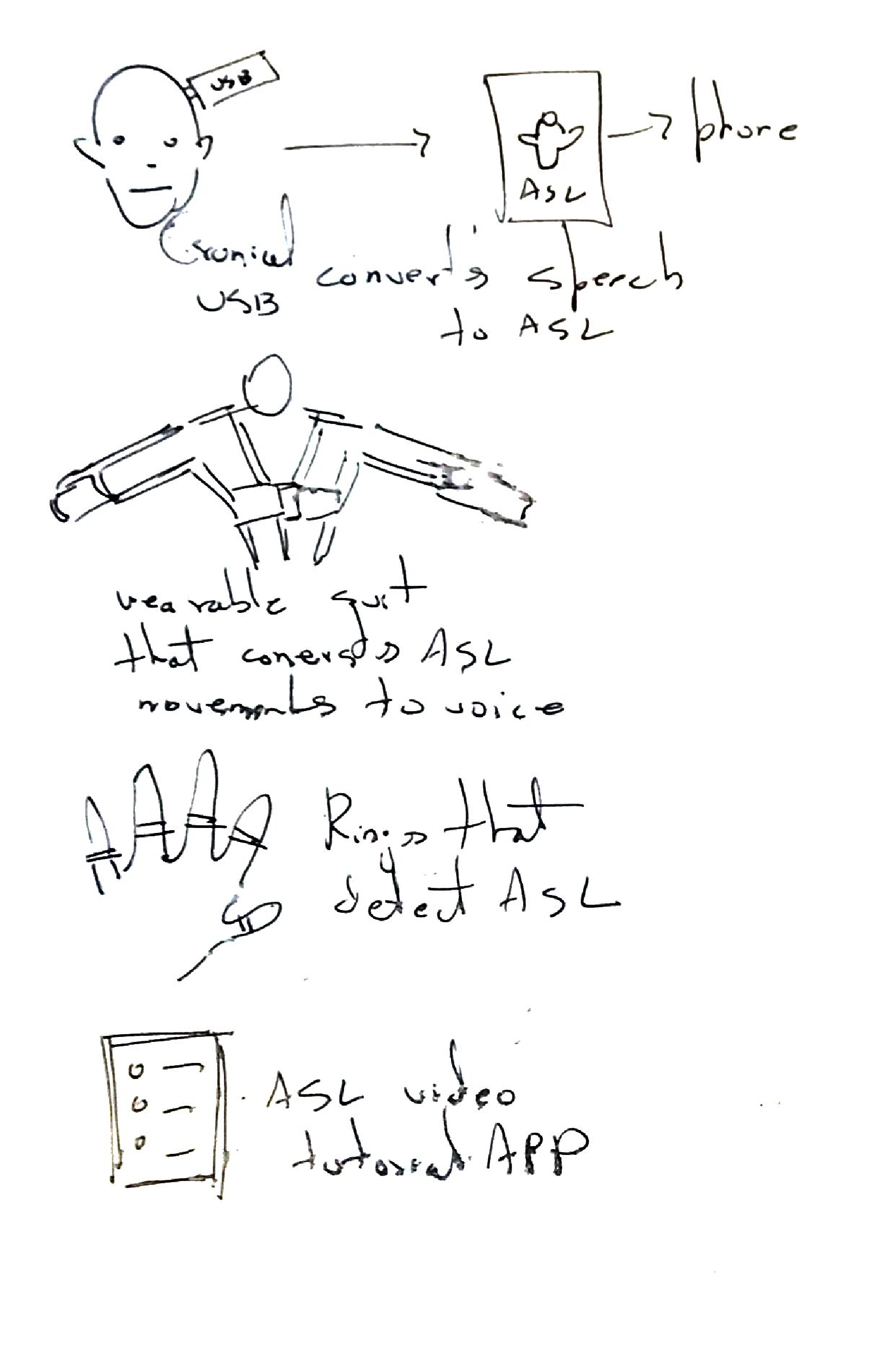

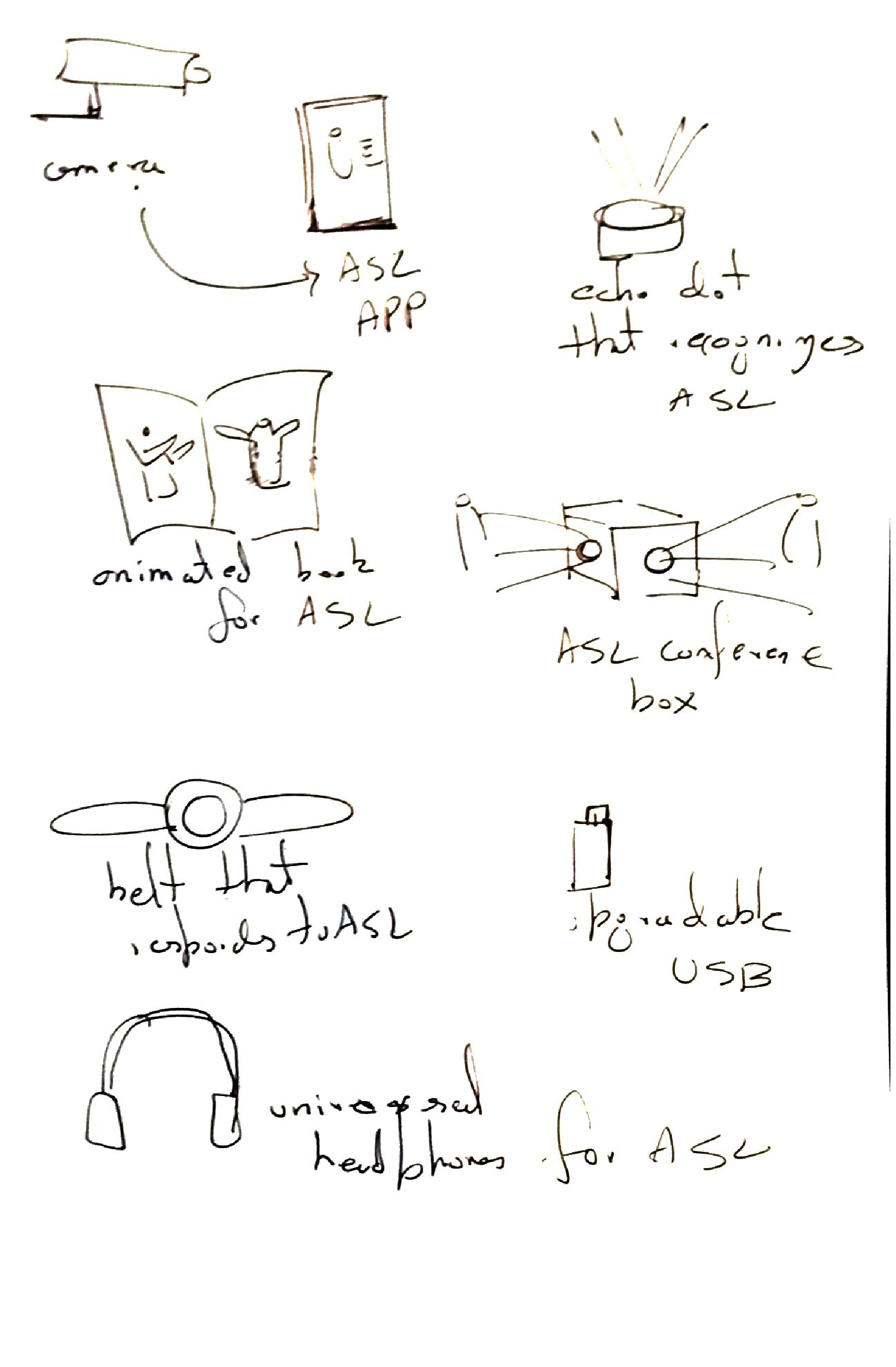

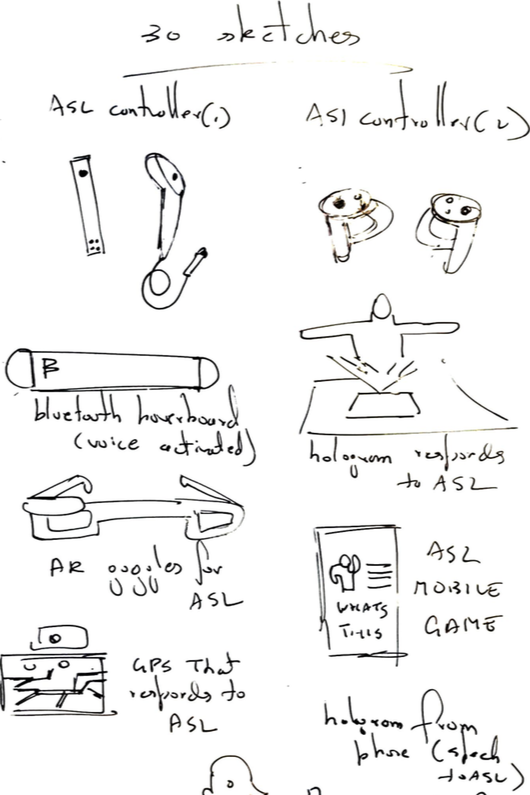

BRAINSTORMING IDEAS

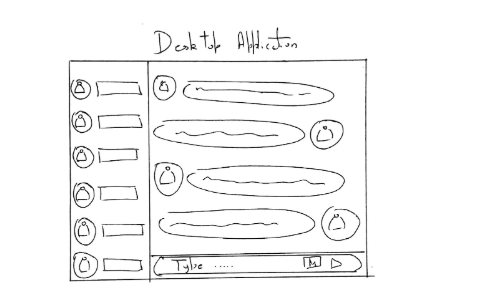

Initial Sketches

Once we defined the target personas along with the use cases and a conceptual model, we came up with a series of sketches.

The Final Sketch

Each team member came up with their own sketches, which we then converged into a single sketch based on the most effective solutions.

Our final sketch was a mobile and desktop application that allowed users to request ASL interpreters at any time. This would be a video chat application for one-to-one or group communication, with an interpreter included in the chat who would sign for DHH users and translate ASL into English for hearing users.

PERSONAS

Based on our proto-personas and design opportunities, we created three personas to represent our target users.

STORYBOARDS

We based our storyboards on a primary use case for each of our primary personas. The goal was to build empathy for the target users by visually highlighting the communication challenges they face in specific scenarios such as team meetings.

SCENARIO 1

The software development team of 'TechDojo', a Seattle startup that makes accounting software, is using 'BrickTalk' to communicate with their CEO about an emergency situation with their product.

SCENARIO 2

A PhD student is conducting a study in which a DHH user and a hearing user converse with each other through a third-party app. She uses 'BrickTalk' for this study.

SCENARIO 3

An accomplished entrepreneur and inventor who is also a DHH user is using 'BrickTalk' to converse with a potential investor for his latest startup.

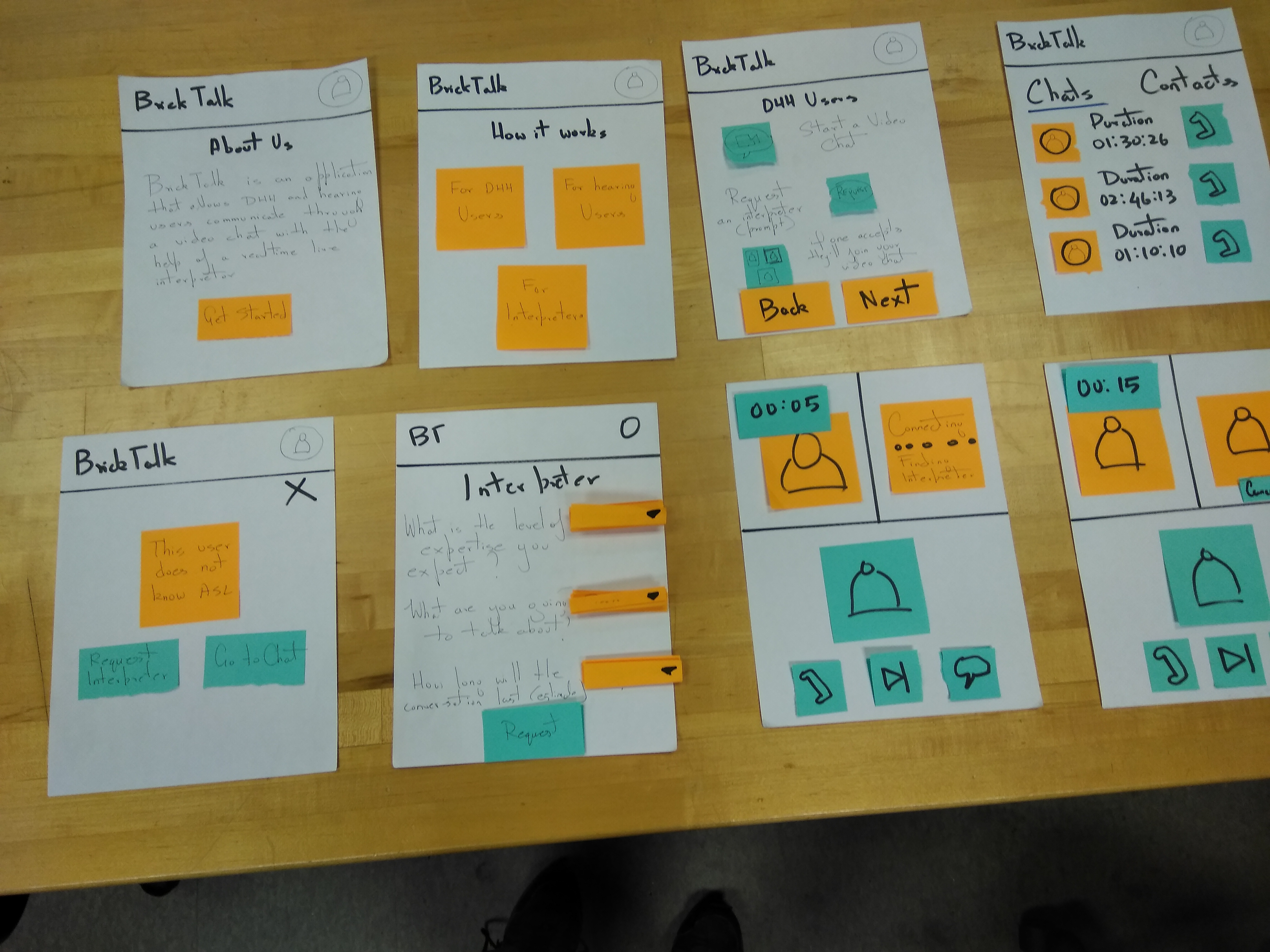

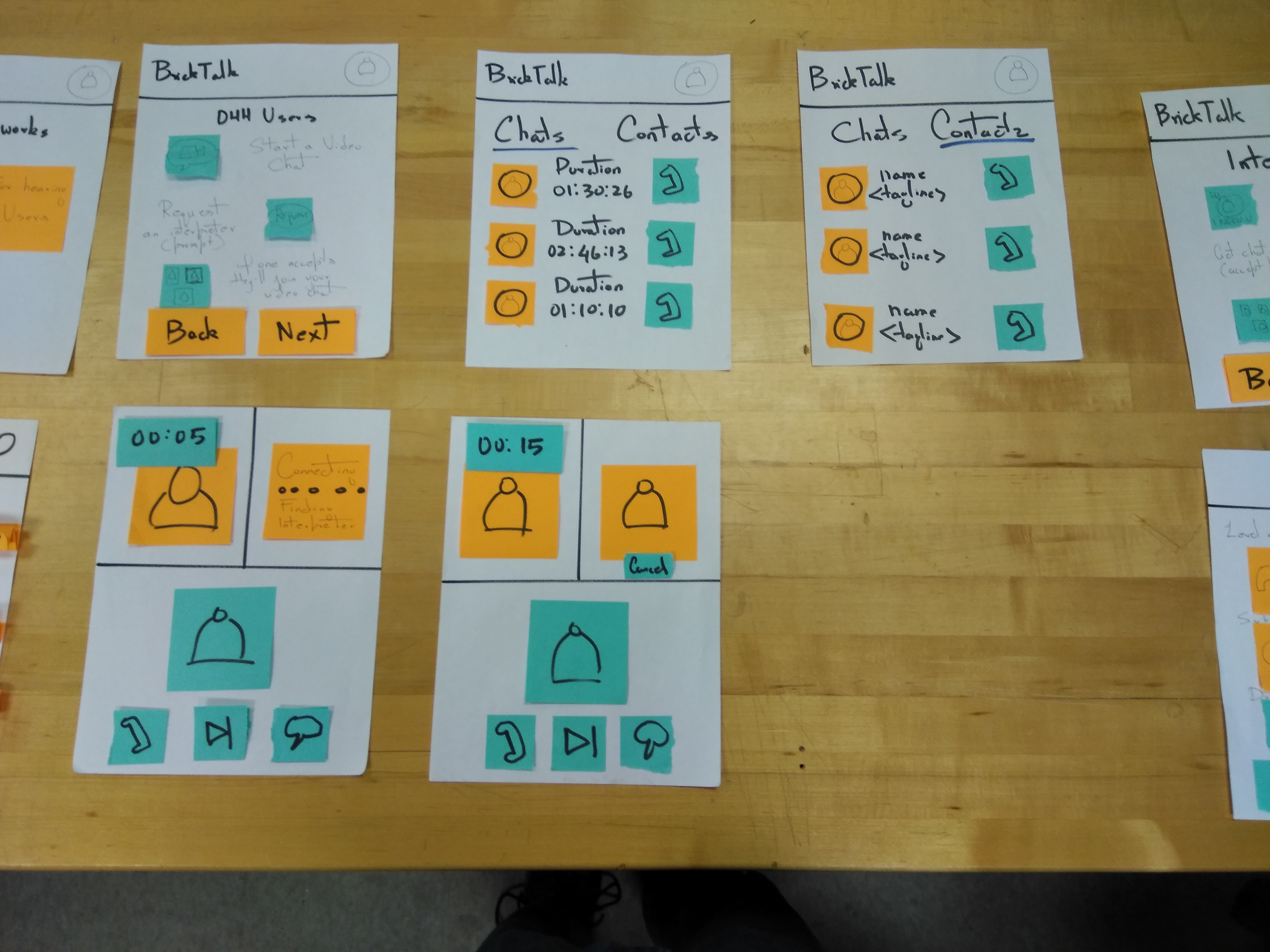

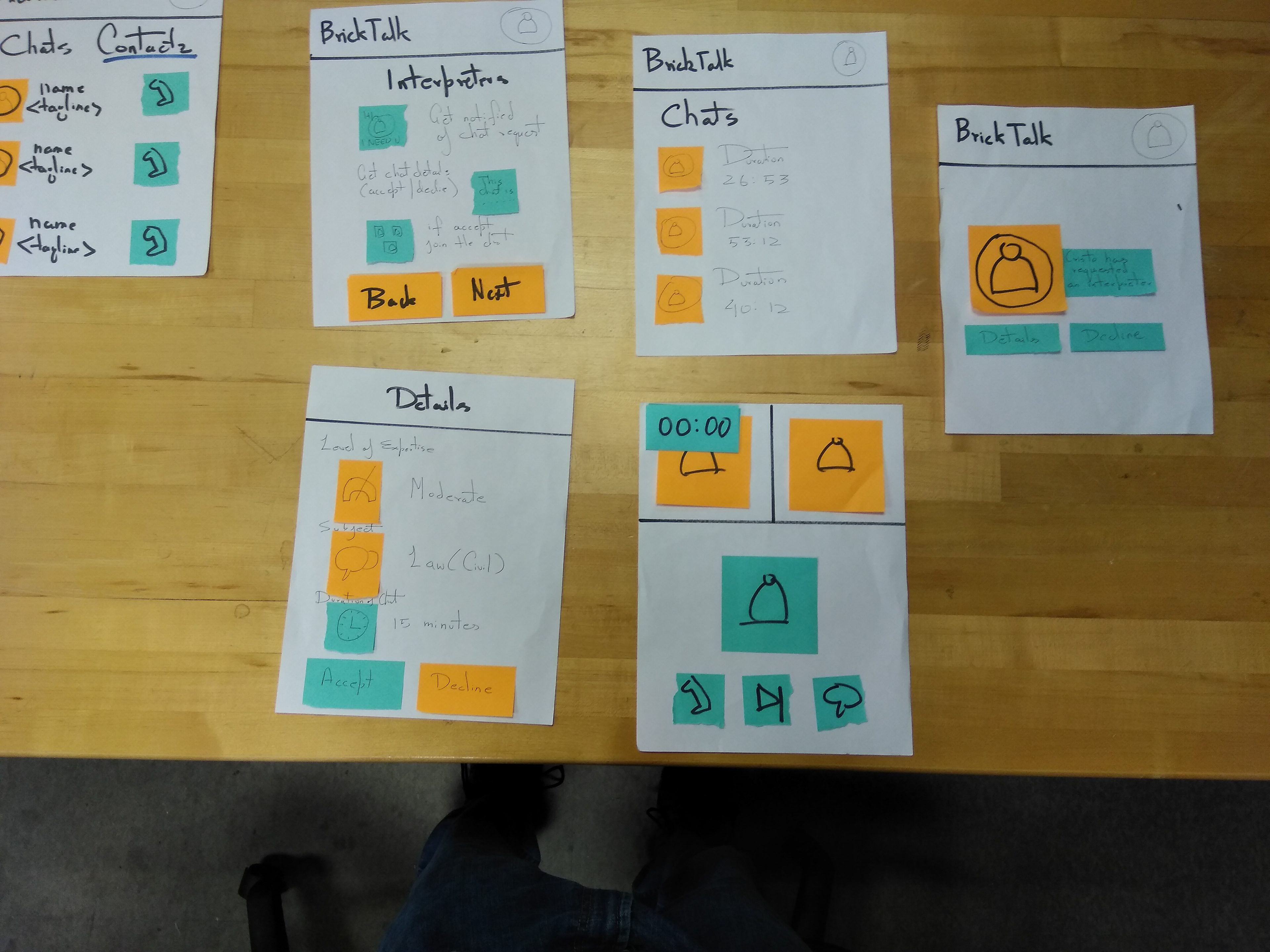

Paper Prototype

We used A4 sheets and post-it notes for our paper prototype because we wanted to simulate actual interaction with the interface. One challenge we noticed during our testing sessions was that our key stakeholders could not distinguish between interactive elements like buttons and static elements like text, video and images, because they were all on post-it notes.

High-Fidelity Prototype

I decided to go with a minimal design for the high-fidelity prototype.

The user in this scenario is a hearing user who would like to converse with a DHH user. The first screen shows the chat history as well as a list of contacts in alphabetical order.

Once the user decides to video call a DHH user, a notification appears reminding the hearing user that an interpreter will be required for this conversation.

The user can choose to continue with the call if they know ASL or request an interpreter that will join them in the call.

Next, the user selects the level of expertise required. Expert interpreters are not always available, in which case the user has to fall back on an interpreter who is available. If the conversation is brief and does not involve complex terminology, the user can opt for a less experienced interpreter.

The user then specifies the topic of the conversation and the intended chat duration.

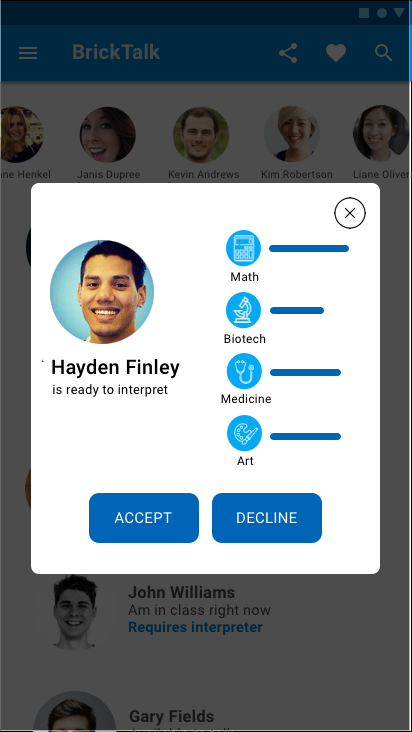

Once the system finds an available interpreter who agrees to the session, the user can see that interpreter's ratings in several areas (as rated by previous users) and choose to accept or decline the interpreter's offer.

If they accept, the chat starts. Otherwise, the user is returned to the previous screen for entering the request details.

The user is then redirected to a chat window. Because this screen is shown from the hearing user's perspective, the window at the top left shows the DHH user (the windows are reversed on the DHH user's screen).

The top-right window shows the interpreter, who has joined the call and signs for the DHH user and speaks for the hearing user.

The app also supports group chats. We designed it to accommodate a maximum of 5 users (excluding the interpreter) because it would be too challenging for the interpreter to sign for multiple users, especially if they frequently speak out of turn (an issue we expect to arise).
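The interpreter request flow and the group-size limit described above can be summarized as a small matching routine. The TypeScript below is a minimal sketch under stated assumptions: the three expertise levels, the field names and the `findInterpreter` and `canAddParticipant` functions are hypothetical, chosen only for illustration. It looks for an available interpreter on the requested topic at the requested level and, if none is free, moves to less experienced levels.

```typescript
// Hypothetical model of the interpreter request flow; names and levels are illustrative assumptions.
type Expertise = "expert" | "experienced" | "beginner";

// Ordered from most to least experienced, so later entries act as fallbacks.
const LEVELS: Expertise[] = ["expert", "experienced", "beginner"];

interface Interpreter {
  name: string;
  expertise: Expertise;
  topics: string[]; // subjects the interpreter can cover, e.g. "Law" or "Engineering"
  available: boolean;
}

interface InterpreterRequest {
  expertise: Expertise;
  topic: string;
  durationMinutes: number; // intended chat duration entered by the user
}

interface MatchResult {
  interpreter: Interpreter;
  isFallback: boolean; // true when the match is less experienced than requested
}

// Start at the requested level and only move to less experienced levels when
// no available interpreter covers the topic at the current level.
function findInterpreter(pool: Interpreter[], req: InterpreterRequest): MatchResult | undefined {
  const start = LEVELS.indexOf(req.expertise);
  for (const level of LEVELS.slice(start)) {
    const matches = pool.filter(
      (i) => i.available && i.expertise === level && i.topics.indexOf(req.topic) !== -1,
    );
    if (matches.length > 0) {
      return { interpreter: matches[0], isFallback: level !== req.expertise };
    }
  }
  return undefined; // no interpreter available for this topic at any level
}

// Assumed cap: at most 5 DHH and hearing participants, not counting the interpreter.
const MAX_PARTICIPANTS = 5;

function canAddParticipant(currentParticipants: number): boolean {
  return currentParticipants < MAX_PARTICIPANTS;
}
```

Returning an `isFallback` flag rather than silently substituting a less experienced interpreter keeps the final decision with the user. This mirrors the design, where the user accepts or declines the interpreter's offer.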

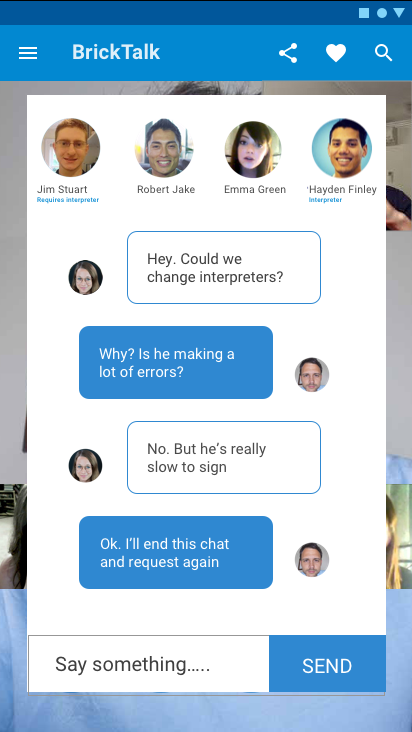

I also designed a built-in text chat feature that DHH or hearing users can use as a secondary means of communication, for example if the interpreter is not proficient and a new chat has to be started with a new interpreter. The text chat appears as a modal overlay, so messages need to be very brief to avoid disrupting the regular video chat.

Finally, I designed tablet and desktop versions of the app for larger screens.

Usability Testing Feedback

Finally, we conducted a usability evaluation in which our key stakeholder interacted with our high-fidelity prototype. We set up two scenarios and assigned a set of tasks for each one. The testing sessions produced the following feedback.